I read Paul Edwards’ A Vast Machine this summer while working with Steve Easterbrook. It was highly relevant to my research, but I would recommend it to anyone interested in climate change or mathematical modelling. Think The Discovery of Global Warming, but more specialized.

Much of the public seems to perceive observational data as superior to scientific models. The U.S. government has even attempted to mandate that research institutions focus on data above models, as if data were somehow more trustworthy. This is not the case. Data can have just as many problems as models, and when the two disagree, either could be wrong. For example, in a high school physics lab, I once calculated the acceleration due to gravity to be about 30 m/s². There was nothing wrong with Newton’s Laws of Motion – our instrumentation was just faulty.

Additionally, data and models are inextricably linked. In meteorology, GCMs produce forecasts from observational data, but that same data from surface stations was fed through a series of algorithms – a model for interpolation – to make it cover an entire region. “Without models, there are no data,” Edwards proclaims, and he makes a convincing case.
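
To make the “model for interpolation” idea concrete, here is a minimal sketch of one very simple scheme, inverse distance weighting. This is my own illustration, not the method Edwards describes or anything an operational forecast centre uses (real systems rely on far more sophisticated data assimilation). The station coordinates and temperatures are made up, and the function name `idw_interpolate` is hypothetical.

```python
import math


def idw_interpolate(stations, x, y, power=2.0):
    """Estimate a value at (x, y) from a list of (sx, sy, value) stations.

    Each station's reading is weighted by 1 / distance**power, so nearby
    stations count for more than distant ones.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for sx, sy, value in stations:
        dist = math.hypot(x - sx, y - sy)
        if dist == 0.0:
            # The point sits exactly on a station: use its reading directly.
            return value
        weight = 1.0 / dist ** power
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total


# Three invented stations: (x in km, y in km, temperature in deg C).
stations = [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 14.0)]

# Fill a 3x3 grid covering the region, including points with no station.
grid = [
    [idw_interpolate(stations, gx, gy) for gx in (0.0, 5.0, 10.0)]
    for gy in (0.0, 5.0, 10.0)
]
```

Every gridded value away from a station is an output of this little model rather than a measurement, and changing `power` changes the “data”.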

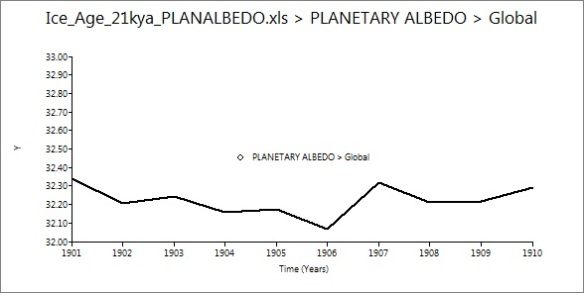

The majority of the book discussed the history of climate modelling, from the 1800s until today. There was Arrhenius, followed by Ångström, who seemed to discredit the entire greenhouse theory, which was not revived until Callendar came along in the 1930s with a better spectroscope. There was the question of the ice ages, and the mistaken perception that forcing from CO2 and forcing from orbital changes (the Milankovitch model) were mutually exclusive.

For decades, those who studied the atmosphere were split into three groups, with three different strategies. Forecasters needed speed in their predictions, so they used intuition and historical analogues rather than numerical methods. Theoretical meteorologists wanted to understand weather using physics, but numerical methods for solving differential equations didn’t exist yet, so nothing was actually calculated. Empiricists thought the system was too complex for any kind of theory, so they just described climate using statistics, and didn’t worry about large-scale explanations.

The three groups began to merge as the computer age dawned and large numbers of calculations became feasible. Punch-cards came first, speeding up numerical forecasting considerably, but not enough to make it practical. The first model run on a digital computer, on ENIAC, allowed simulations to run as fast as real time (today the same model can run on a phone, simulating 24 hours in less than a second).

Before long, theoretical meteorologists “inherited” the field of climatology. Large research institutions, such as NCAR, formed in an attempt to pool computing resources. With incredibly simplistic models and primitive computers (2-3 KB storage), the physicists were able to generate simulations that looked somewhat like the real world: Hadley cells, trade winds, and so on.

There were three main fronts for progress in atmospheric modelling: better numerical methods, which decreased errors from approximation; higher resolution models with more gridpoints; and higher complexity, including more physical processes. As well as forecast GCMs, which are initialized with observations and run at maximum resolution for about a week of simulated time, scientists developed climate GCMs. These didn’t use any observational data at all; instead, the “spin-up” process fed known forcings into a static Earth, started the planet spinning, and waited until it settled down into a complex climate and circulation that looked a lot like the real world. There was still tension between empiricism and theory in models, as some factors were parameterized rather than being included in the spin-up.
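
The spin-up idea can be illustrated with something vastly simpler than a GCM. The sketch below is my own toy, not anything from the book: a zero-dimensional energy balance model with a single global temperature, stepped forward from an arbitrary starting value until incoming and outgoing radiation balance. All parameter values are illustrative assumptions (the effective emissivity in particular is a tuning value picked so the result lands roughly near the observed global mean), and the function names are hypothetical.

```python
SIGMA = 5.670374e-8        # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR = 1361.0             # incoming solar flux, W m^-2 (assumed)
ALBEDO = 0.3               # planetary albedo (assumed)
EMISSIVITY = 0.612         # effective emissivity (assumed tuning value)
HEAT_CAPACITY = 4.0e8      # J m^-2 K^-1, roughly a 100 m ocean layer (assumed)
SECONDS_PER_DAY = 86400.0


def spin_up(start_temp, years=100):
    """Step the temperature forward one day at a time (forward Euler)."""
    temp = start_temp
    for _ in range(int(years * 365)):
        absorbed = SOLAR * (1.0 - ALBEDO) / 4.0
        emitted = EMISSIVITY * SIGMA * temp ** 4
        temp += (absorbed - emitted) / HEAT_CAPACITY * SECONDS_PER_DAY
    return temp


def equilibrium_temp():
    """Analytic balance point, where absorbed equals emitted."""
    return (SOLAR * (1.0 - ALBEDO) / (4.0 * EMISSIVITY * SIGMA)) ** 0.25


# Two very different starting states, no observations involved.
cold_start = spin_up(200.0)
hot_start = spin_up(350.0)
```

Both runs settle to the same temperature regardless of where they started, which is the point: the final state comes from the forcings and the physics, not from the initial conditions. A real climate GCM does the same thing with a full three-dimensional atmosphere and ocean.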

The Cold War, despite what it did to international relations, brought tremendous benefits to atmospheric science. Much of our understanding of the atmosphere and the observation infrastructure traces back to this period, when governments were monitoring nuclear fallout, spying on enemy countries with satellites, and considering small-scale geoengineering as warfare.

I appreciated how up-to-date this book was, as it discussed AR4, the MSU “satellites show cooling!” controversy, Watts Up With That, and the Republican anti-science movement. In particular, Edwards emphasized the distinction between skepticism for scientific purposes and skepticism for political purposes. “Does this mean we should pay no attention to alternative explanations or stop checking the data?” he writes. “As a matter of science, no…As a matter of policy, yes.”

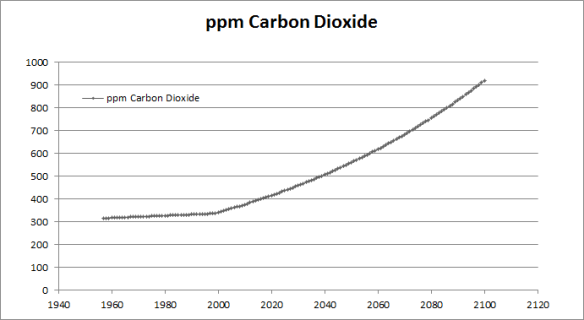

Another passage beautifully sums up the entire narrative: “Like data about the climate’s past, model predictions of its future shimmer. Climate knowledge is probabilistic. You will never get a single definitive picture, either of exactly how much the climate has already changed or of how much it will change in the future. What you will get, instead, is a range. What the range tells you is that ‘no change at all’ is simply not in the cards, and that something closer to the high end of the range – a climate catastrophe – looks all the more likely as time goes on.”